LawBurst: Court Rules Fair Use Permits Training LLMs on Purchased Books

A complicated victory for both Anthropic/Meta and creators

Last week, a federal judge in the Northern District of California handed the AI industry a significant win regarding the use of copyrighted material to train large language models (LLMs). In Andrea Bartz, Charles Graeber, and Kirk Wallace Johnson v. Anthropic PBC, the court held that training LLMs on copyrighted books constitutes fair use—so long as the books were lawfully acquired. In other words, the ruling doesn’t give carte blanche to go pirating. Just two days later, a different federal judge in the Northern District of California granted summary judgment to Meta (Facebook) in a parallel AI copyright infringement case, reaching a similar conclusion.

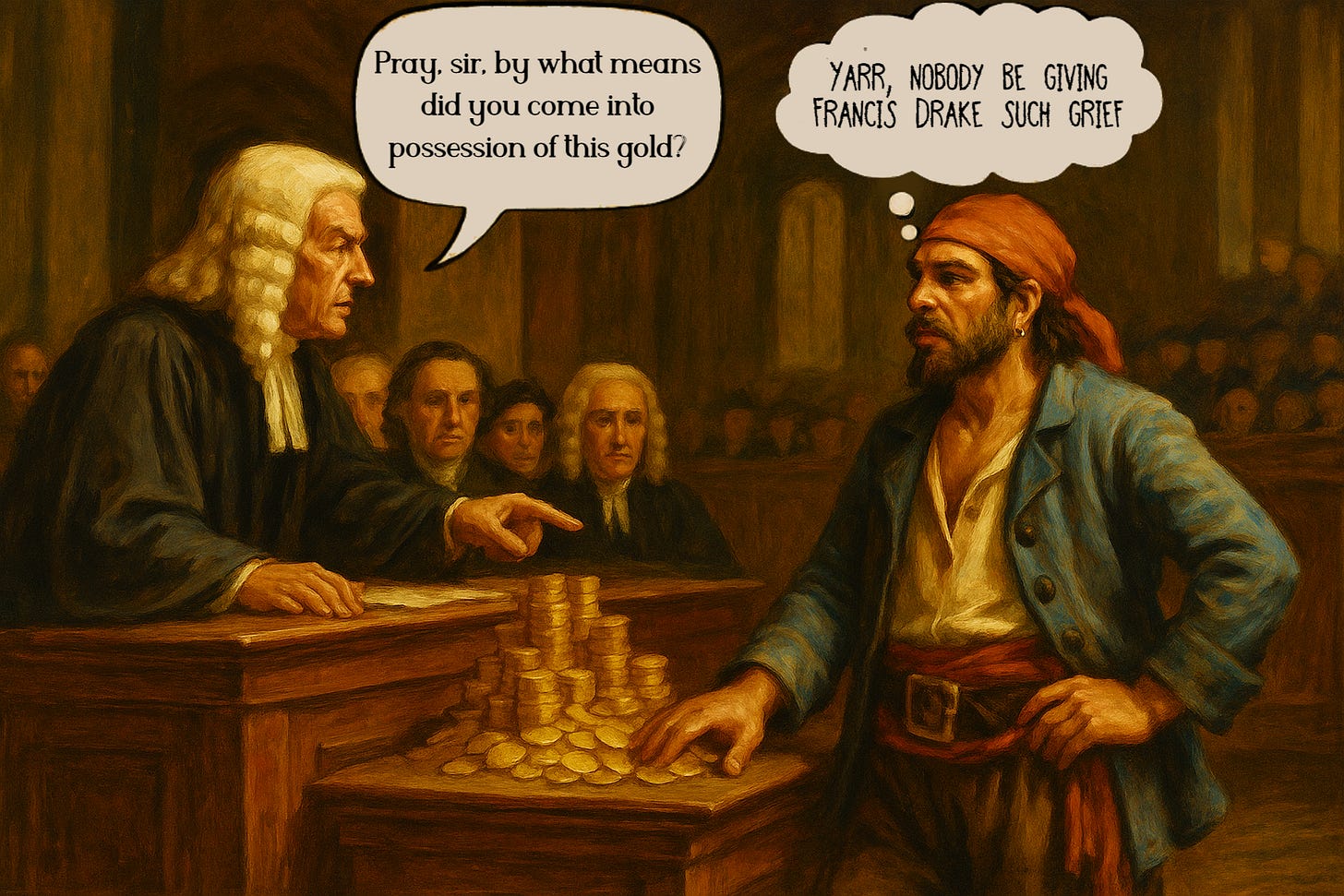

Taken together, these were significant rulings as they mark the first decisions to provide clarity on how AI companies can color within the lines as they train their models. But make no mistake—they don’t give free rein to swashbuckling AI firms eager to plunder the open seas of online content. Let’s dig into the courts’ reasoning—and what it means for those trying to build in this fast-moving space.

I. The Case: Bartz, Graeber, Johnson v. Anthropic PBC

A. Background

In 2024, a group of authors filed a class-action suit against Anthropic, alleging that the company had used pirated copies of their books to train its chatbot, Claude. They claimed their works had been downloaded without permission from shadow libraries like Books3, LibGen, and PiLiMi. Anthropic had also bought print copies of books, which it then scanned and digitized (destroying the originals).

Anthropic admitted to downloading and storing these materials, but argued that the use was protected under the “fair use doctrine” — a legal principle that permits limited use of copyrighted material without permission for purposes such as commentary, criticism, education, or transformative uses. For a refresher on the “fair use doctrine,” check out a prior post.

According to Anthropic, feeding books into an AI model was “quintessentially transformative.” The point wasn’t to replicate or compete with the original books but to teach the model how to write and reason like a human. The company also emphasized that its model did not reproduce specific works but instead generated original content. Because the goal was not to copy or mimic the original works, Anthropic argued, its use posed no threat to the authors.

Anthropic’s library strategy was twofold:

Train LLMs: It used selected books to train and fine-tune Claude, aiming to improve fluency, tone, and reasoning.

Amass a “Forever” Research Library: It maintained a massive, searchable internal repository of books—over 7 million, including pirated works—even if they weren’t immediately used for training.

It was the second part of the strategy that proved problematic.

B. The Ruling

The judge had no problem with:

Using lawfully purchased books for training: Anthropic’s use of lawfully purchased books—even when scanned and digitized—qualified as fair use under U.S. copyright law. The training process was deemed “spectacularly transformative.” The judge likened it to a person reading thousands of books to learn how to write better. The model, Claude, didn’t reproduce or redistribute content—it synthesized new outputs based on patterns.

Digitizing printed books for internal use: The court compared this to converting a personal bookshelf into a searchable digital format. As long as the books were legally purchased, scanning them for internal AI training was not considered infringement.

However, Judge William Alsup ruled against Anthropic on the pirated material. Yarr.

The judge rejected the notion that downstream transformative use could cleanse the initial wrongdoing. Anthropic also faced some bad facts: its own communications revealed that it chose not to license books in order to avoid what its CEO described as a “legal/practice/business slog.” Such shortcutting undermined its fair use defense. We’ll have to wait for the court to rule on damages.

II. Kadrey v. Meta

A. Background/Ruling

The Meta case has a fact pattern similar to Anthropic’s: a group of authors, including Sarah Silverman and Ta‑Nehisi Coates, sued Meta for copying their books from “shadow libraries” to train its LLM, Llama.

The court granted Meta’s motion for summary judgment on the authors’ copyright claims tied to LLM training. Judge Vince Chhabria applied the four-factor fair use test and found that three of the four factors weighed in Meta’s favor:

Factor 1 (Purpose and Character): Point for Meta. Training Llama to generate new content, not to replace books, was a “highly transformative” use. Meta’s commercial motive and use of shadow libraries didn’t outweigh the shift in purpose.

Factor 2 (Nature of the Work): Point for the authors. Their books were creative, but ultimately this factor carried little weight.

Factor 3 (Amount of Work Used): Point for Meta. Copying full books was “reasonably necessary” to teach the model how to handle long-form, structured content.

Factor 4 (Market Effect): Point for Meta—but with caution. Plaintiffs showed no real evidence of lost sales or regurgitated outputs.

The caveat: the court flagged that if LLMs were to flood the market with similar works and indirectly undercut authors, this factor could weigh in favor of future plaintiffs.

Ultimately, the judge signaled that his ruling should be taken with a giant grain of salt: Meta won not because its use was clearly lawful, but because the plaintiffs “made the wrong arguments.”

III. Recent U.S. Copyright Office Report

These rulings land just weeks after the U.S. Copyright Office (USCO) released a report, cheered by many creators, that tackled the key question: Is using copyrighted content without permission to train Generative AI (GenAI) models fair use?

Its answer: …maybe. The USCO wrote that the determination is fact-specific, but that courts should focus on (i) whether the use is transformative and (ii) its effect on the market for the copyrighted works.

For example, if an AI model is used for noncommercial purposes and doesn’t reproduce the copyrighted material in its outputs, that may weigh in favor of fair use. But if the model were trained on pirated data or if its outputs compete with the original work, expect heavy legal scrutiny. The USCO drew a clear line between benign, internal uses and high-risk practices that copy expressive works from pirate sources to generate unrestricted outputs — particularly when licensing options are available.

Crucially, the USCO rejected the argument that training on copyrighted content is inherently fair use, cautioning that language models don’t just learn meaning—they absorb the arrangement and structure of linguistic expression. If a model memorizes and regurgitates protected material, that could constitute infringement.

The USCO also avoided recommending compulsory licensing, favoring instead the emergence of voluntary and extended collective licensing systems to manage the complexity of content ownership and permissions. This framework could offer a scalable path forward, especially as courts begin grappling with the scope of market harm caused by GenAI applications.

And although the report isn’t binding, it’s already appearing in court filings. Its emphasis on provenance, market impact, and licensing alternatives reinforces the direction in which courts, like those of Judges Alsup and Chhabria, appear to be heading.

IV. Takeaway

Bottom line: you gotta pay tribute for what you use. You can’t Captain Hook your way through training LLMs. To amass your treasure, you can’t loot the open seas of creative content without a proper Letter of Marque (i.e., you pay for the content you use).

These rulings are a partial victory for both creators and AI companies—and they chart out a map for future legal battles. Courts agree that LLM training is transformative, but how you get your training data still matters. Judge Alsup took a piece-by-piece approach, flagging piracy as a dealbreaker. Judge Chhabria looked at the end goal alone, but warned that economic harm could still torpedo a fair use defense.

Future plaintiffs should focus on provenance and market harm (bring better data like surveys, sales drops, or dilution studies) to tip the scale in this battle.

This fight between creators and AI companies is only beginning. Expect appeals in both cases and a range of interpretations from other judges until clearer appellate guidance emerges. Either way, these are the early days of the great battle on the high seas of GenAI and fair use.

The ships are built. The flags are raised. Grab the popcorn: this one’s going to be a blockbuster.

Resources:

The Ruling (Bartz v. Anthropic PBC): https://fingfx.thomsonreuters.com/gfx/legaldocs/jnvwbgqlzpw/ANTHROPIC%20fair%20use.pdf

Anthropic Scores a Landmark AI Copyright Win—but Will Face Trial Over Piracy Claims - Wired: https://www.wired.com/story/anthropic-ai-copyright-fair-use-piracy-ruling/

Pirates, Privateers, and Civil War Maritime Laws - Library of Congress: https://blogs.loc.gov/law/2020/05/pirates-privateers-and-civil-war-maritime-laws/

Copyright and Artificial Intelligence Part 3: Generative AI Training - US Copyright Office: https://www.copyright.gov/ai/Copyright-and-Artificial-Intelligence-Part-3-Generative-AI-Training-Report-Pre-Publication-Version.pdf

US Copyright Office Issues Report Addressing Use of Copyrighted Material to Train Generative AI Systems - Schulte Roth: https://www.srz.com/en/news_and_insights/alerts/us-copyright-office-issues-report-addressing-use-of-copyrighted-material-to-train-generative-ai-systems

Mixed Anthropic Ruling Builds Roadmap for Generative AI Fair Use - Bloomberg Law: https://news.bloomberglaw.com/ip-law/mixed-anthropic-ruling-builds-roadmap-for-generative-ai-fair-use

Anthropic and Meta fair use rulings on AI model training - McDermott Will & Emery: https://www.mwe.com/insights/anthropic-and-meta-fair-use-rulings-on-ai-model-training/

Northern District of California Judge Rules That Meta’s Training of AI Models is Fair Use - Goodwin: http://goodwinlaw.com/en/insights/publications/2025/06/alerts-practices-aiml-northern-district-of-california-judge-rules

Images: All original images are AI-generated using a combination of DALL-E, Firefly, DreamStudio and Pixlr.

Disclaimer: This post is for general information purposes only. It does not constitute legal advice. This post reflects the current opinions of the author(s). The opinions reflected herein are subject to change without being updated.